I decided Hacker School would be a great opportunity to learn some iOS frameworks I’ve never worked with before, since right now I have more time for something with a steeper learning curve than I do when I need to finish a product quickly.

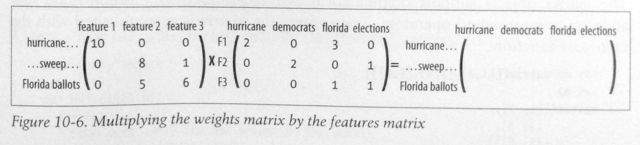

I had an idea for a game/drawing app that would be a simple way to learn some of the animations and custom touch controls that are possible in iOS. After learning about the different game frameworks available in iOS 8, I decided to start working through a SpriteKit tutorial to get the hang of writing games.


Since I’m working in Swift, it was much trickier to get the tutorial code up and running, as everything needed to be shifted over to the Swift way of thinking before I could test it out. I got a basic app running, but ran into some nightmarish problems with a CGPoint init method while doing some vector math. The problem had to do with numeric types: I finally realized that I was mixing CGFloats with Floats, which in Swift are completely different types.

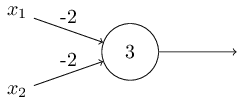

This is what I finally got to compile:

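As a rough illustration of vector math that keeps its types consistent, here is a minimal sketch. The helper names (`add`, `subtract`, `scale`, `length`) are assumptions made up for this example, not the tutorial's code; the point is only that every value stays a `CGFloat` or a `CGPoint` from start to finish.

```swift
import Foundation  // provides CGPoint and CGFloat

// Hypothetical helpers; the names are illustrative, not from the tutorial.
func add(_ a: CGPoint, _ b: CGPoint) -> CGPoint {
    return CGPoint(x: a.x + b.x, y: a.y + b.y)
}

func subtract(_ a: CGPoint, _ b: CGPoint) -> CGPoint {
    return CGPoint(x: a.x - b.x, y: a.y - b.y)
}

// The factor is a CGFloat so it matches the type of the point's components.
func scale(_ p: CGPoint, by factor: CGFloat) -> CGPoint {
    return CGPoint(x: p.x * factor, y: p.y * factor)
}

// Euclidean length of the vector from the origin to p.
func length(_ p: CGPoint) -> CGFloat {
    return (p.x * p.x + p.y * p.y).squareRoot()
}
```

Because the parameters and return values are all declared as `CGFloat` or `CGPoint`, no conversions are needed inside the helpers.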

Because I was sending Floats to CGPoint’s init instead of CGFloats, the following caused the compiler error “Could not find an overload for ‘init’ that accepts the supplied arguments”.

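A minimal sketch of this kind of mismatch and one way around it, assuming two hypothetical `Float` values named `dx` and `dy` (they are not from the tutorial): the failing call is left as a comment, and the working version converts each component explicitly.

```swift
import Foundation  // provides CGPoint and CGFloat

// Hypothetical inputs that happen to be Floats.
let dx: Float = 3.0
let dy: Float = 4.0

// let broken = CGPoint(x: dx, y: dy)
// The line above does not compile: CGPoint has no initializer that takes Floats.

// Converting each component to CGFloat lets the compiler find a matching init.
let offset = CGPoint(x: CGFloat(dx), y: CGFloat(dy))
```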

The following function resulted in the compiler error “Could not find an overload for ‘/’ that accepts the supplied arguments”. I was dividing a CGFloat by a Float, which Swift does not allow without first converting one operand to the other’s type, because it does not implicitly convert between the two.

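The same idea applies to division. The sketch below uses a hypothetical `divide(_:by:)` function, invented for this example, that takes a `Float` scalar and converts it once before dividing.

```swift
import Foundation  // provides CGPoint and CGFloat

// Hypothetical function: divides both components of a point by a Float scalar.
func divide(_ point: CGPoint, by scalar: Float) -> CGPoint {
    // point.x / scalar would not compile, since no `/` takes a CGFloat and a Float.
    let divisor = CGFloat(scalar)  // convert once so both operands are CGFloat
    return CGPoint(x: point.x / divisor, y: point.y / divisor)
}
```

Declaring the parameter as `CGFloat` in the first place would avoid the conversion entirely; the `Float` parameter is kept here only to show the mismatch.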

The debugging process was pretty frustrating, but by the time I figured out what the problem was with the types, I had learned a lot about how Swift works and had a stronger sense of the need to be explicit about the types each function takes and returns.

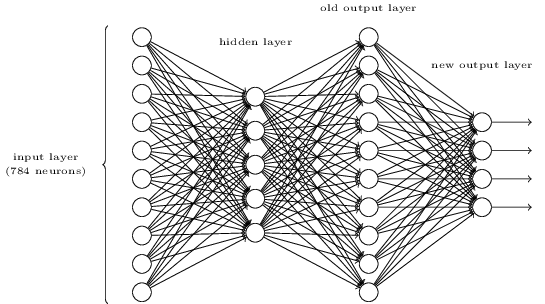

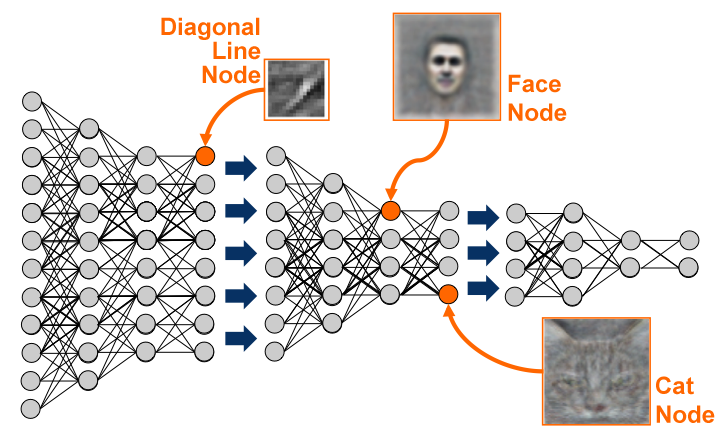

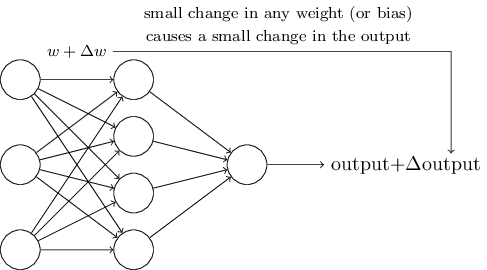

I learned about creating a game scene and adding “nodes” to the scene, which can be anything from a label to a player, an enemy, or a projectile, and manipulating them with simple actions like move or rotate. Once I had a sense of the structure of the game, it was a lot clearer how to set up something simple. I’m looking forward to learning more about physics and reacting to different events tomorrow.
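To make the scene/node/action structure concrete, here is a minimal sketch using SpriteKit. The class name `DemoScene`, the `buildScene()` method, and the positions, colors, and durations are all assumptions for illustration, not the tutorial's code; it needs an Apple platform where SpriteKit is available.

```swift
import SpriteKit

// Hypothetical scene; names, colors, and sizes are illustrative assumptions.
final class DemoScene: SKScene {
    let player = SKSpriteNode(color: .blue, size: CGSize(width: 40, height: 40))
    let scoreLabel = SKLabelNode(text: "Score: 0")

    // SpriteKit calls this when the scene is presented in a view.
    override func didMove(to view: SKView) {
        buildScene()
    }

    // Adds a label node and a player node, then runs move and rotate actions together.
    func buildScene() {
        scoreLabel.position = CGPoint(x: size.width / 2, y: size.height - 40)
        addChild(scoreLabel)

        player.position = CGPoint(x: 50, y: size.height / 2)
        addChild(player)

        let move = SKAction.move(to: CGPoint(x: size.width - 50, y: size.height / 2),
                                 duration: 2.0)
        let rotate = SKAction.rotate(byAngle: .pi, duration: 2.0)
        player.run(SKAction.group([move, rotate]))
    }
}
```

An enemy or a projectile would be added the same way: create a node, set its position, call `addChild`, and give it an action to run.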


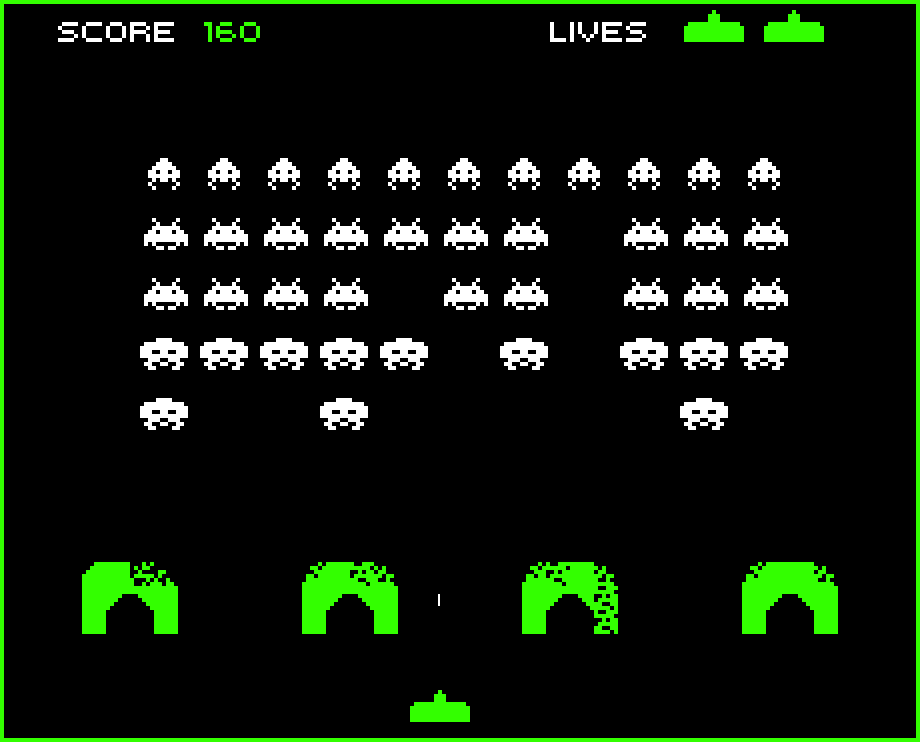

In the afternoon, Mary live-coded a Space Invaders game in JavaScript in under an hour. That was pretty cool to watch, and it amazes me how simple she makes the code seem. Watching her quickly build a minimal working mock-up was a helpful reminder not to get bogged down in details like how the invaders look in version one, when the focus should be on getting the game’s functionality to work. She kept this version simple by using rectangles for the player, bullets, and invaders, which meant she could get a basic game laid out very quickly. I like her development process because all of the functions were laid out simply, and it would be straightforward to go back and edit some of them to add more complex gameplay and graphics, since the basic functionality is already there.
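The rectangles-only approach translates easily to other languages. Below is a minimal sketch in Swift rather than JavaScript; it is not Mary's code, and the `Body` type and `survivors(of:after:)` function are hypothetical names invented here. Every game object is a rectangle with a velocity, and a hit is a rectangle overlap.

```swift
import Foundation  // provides CGRect and CGPoint

// Hypothetical model: every game object is just a rectangle with a velocity.
struct Body {
    var frame: CGRect
    var velocity: CGPoint

    // Advances the rectangle by one tick of its velocity.
    mutating func step() {
        frame.origin.x += velocity.x
        frame.origin.y += velocity.y
    }
}

// Returns the invaders that do not overlap any bullet.
func survivors(of invaders: [Body], after bullets: [Body]) -> [Body] {
    return invaders.filter { invader in
        !bullets.contains { $0.frame.intersects(invader.frame) }
    }
}
```

With a model this small, swapping the rectangles for real sprites later only changes how each `Body` is drawn, not how the game logic works.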

Moving forward, once I finish with the SpriteKit tutorial game, I’m going to try making a similarly simple version of Space Invaders for iOS using SpriteKit and Swift.

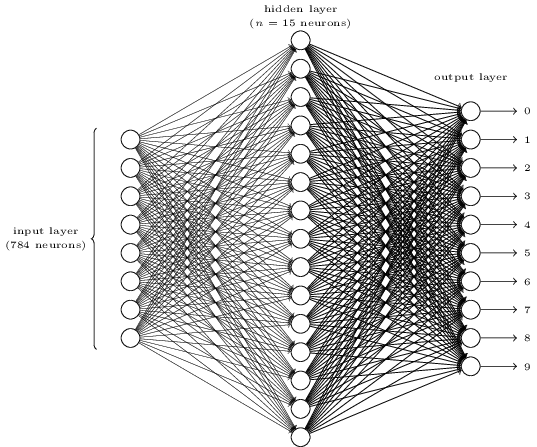

In other news, this is the coolest thing about the Q train!

It’s a series of lit, painted panels on the wall of the subway tunnel between DeKalb and Canal, visible through narrow slits in the tunnel wall. When the train is stopped, it just looks like bright slits, but as soon as the train starts moving, the panels animate flipbook-style. It’s an installation called Masstransiscope that was put there in the 80s, inspired by the zoetrope of the 1800s. Bill Brand, the artist, had the idea that “Instead of having the film go through the projector, you could move the audience past the film.”