I started working on fixing some of the bugginess of the Multipeer Connectivity framework in ObjC in the morning. I tried to hack it so that Multipeer would continue working when the app was in background mode (not currently possible due to Apple’s imposed limitations), so that we could have a count of how many people were connected to the network.

I also spent some time working on a problem that seems to come from the lower-level Bluetooth LE frameworks, where errors occur when trying to reconnect to the same peer multiple times after disconnecting. The two issues are linked, because there can be problems rejoining the network when a backgrounded app is opened again and tries to reconnect. I suspect the first session is not fully closed by the time the device attempts to start a new one.

I got a much deeper understanding of the capabilities of Multipeer while walking through the code that handles the sessions and peers within the network, but in the end I concluded that Multipeer is still pretty buggy. I’m reconsidering whether we should start with our own implementation of a mesh network in Bluetooth LE instead of using Multipeer. Multipeer doesn’t really let us avoid the tricky issues we were handling in CoreBluetooth, and the higher-level framework possibly gives us less power to debug and work around some of the problems. CoreBluetooth may also avoid some of the issues with Multipeer not working in background mode, since we can set a Bluetooth LE message to broadcast in the background, and detecting a nearby iBeacon should be able to “wake up” an app even if it has been closed by the user. Plus I’ve already played around with CoreBluetooth in my SimpleShare Bluetooth LE Local Sharing project.

I switched tasks to continue working through Programming Collective Intelligence, an amazing book on Machine Learning algorithms. It was wonderful to get into working on Machine Learning again, since it’s what I had originally intended to focus on at Hacker School before I was swept up with Swift.

I worked through the chapter on Non-Negative Matrix Factorization today, which was actually way more awesome than it sounds. You can scan through a bunch of articles and get word counts, just like in an earlier chapter on document classification using a naive Bayesian classifier. The Bayesian classifier is first trained on documents whose categories are already known. It then uses a weighted probability based on the word counts to predict which of those predetermined categories a new document belongs to.
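To make the classifier idea concrete, here is a minimal sketch of a naive Bayes text classifier in Python. It is not the book's code: the class name, the toy training sentences, and the use of add-one (Laplace) smoothing in place of the book's weighted probability are all my own assumptions for illustration.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Tiny naive Bayes classifier over word counts (illustrative only)."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # category -> word -> count
        self.doc_counts = Counter()              # category -> documents seen
        self.vocab = set()                       # every word seen in training

    def train(self, text, category):
        words = text.lower().split()
        self.word_counts[category].update(words)
        self.doc_counts[category] += 1
        self.vocab.update(words)

    def classify(self, text):
        words = text.lower().split()
        total_docs = sum(self.doc_counts.values())
        best, best_score = None, -math.inf
        for cat in self.doc_counts:
            # log P(category) + sum of log P(word | category)
            score = math.log(self.doc_counts[cat] / total_docs)
            total_words = sum(self.word_counts[cat].values())
            for w in words:
                # Add-one smoothing so an unseen word does not zero out the score.
                count = self.word_counts[cat][w]
                score += math.log((count + 1) / (total_words + len(self.vocab)))
            if score > best_score:
                best, best_score = cat, score
        return best


# Hypothetical training data: the categories must be decided up front.
nb = NaiveBayes()
nb.train("cheap pills buy now", "spam")
nb.train("buy cheap watches", "spam")
nb.train("meeting notes for the project", "ham")
nb.train("project schedule and notes", "ham")
print(nb.classify("buy cheap pills"))
```

The important limitation is visible in the usage: every category has to be named and given examples before the classifier can predict anything.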

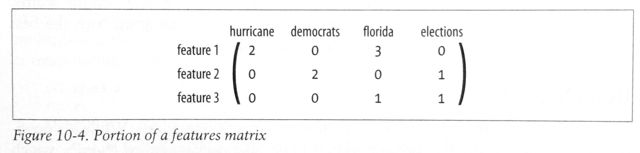

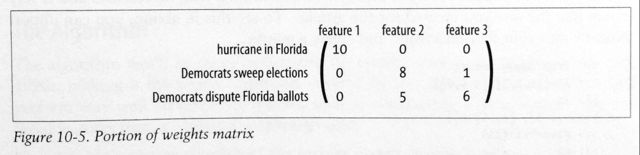

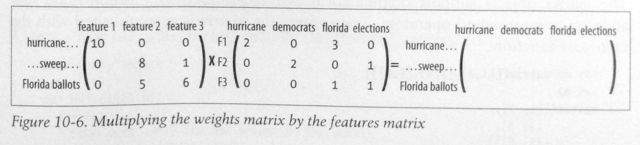

Non-Negative Matrix Factorization is WAY cooler! It starts with the same word-count information, set up as a matrix of articles vs. words, then factors that matrix into two smaller matrices that, multiplied together, are approximately equal to the original. The two factor matrices give you some really neat information about the data set: given only the number of features you want, they extract what the features (categories/themes) are automatically. Each feature is described by the words most strongly associated with it. The first factor matrix has a row for each feature/theme/category and a column for each word, and each number says how strongly that word belongs to that theme. The second factor matrix has a row for each article and a column for each feature, and each number is the weight of that theme in that article.
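Here is a rough sketch of the factorization in plain Python, using the standard multiplicative update rules. The function names, the toy word counts, and the iteration count are my own assumptions, and in practice you would reach for a matrix library instead of nested lists. The product is taken as weights (articles x features) times features (features x words).

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b] for row in a]


def transpose(m):
    return [list(col) for col in zip(*m)]


def cost(v, wh):
    """Sum of squared differences between the original and the reconstruction."""
    return sum((x - y) ** 2 for rv, rw in zip(v, wh) for x, y in zip(rv, rw))


def factorize(v, n_features, iterations=200, seed=0):
    """Approximate v (articles x words) as weights times features."""
    rng = random.Random(seed)
    n_articles, n_words = len(v), len(v[0])
    weights = [[rng.random() for _ in range(n_features)] for _ in range(n_articles)]
    features = [[rng.random() for _ in range(n_words)] for _ in range(n_features)]
    eps = 1e-9  # guards against division by zero

    for _ in range(iterations):
        # features <- features * (W^T V) / (W^T W H), element by element
        wt = transpose(weights)
        num = matmul(wt, v)
        den = matmul(matmul(wt, weights), features)
        features = [[f * n / (d + eps) for f, n, d in zip(fr, nr, dr)]
                    for fr, nr, dr in zip(features, num, den)]

        # weights <- weights * (V H^T) / (W H H^T), element by element
        ht = transpose(features)
        num = matmul(v, ht)
        den = matmul(weights, matmul(features, ht))
        weights = [[w * n / (d + eps) for w, n, d in zip(wr, nr, dr)]
                   for wr, nr, dr in zip(weights, num, den)]

    return weights, features


# Hypothetical word counts: rows are articles, columns are words.
words = ["goal", "match", "team", "vote", "election", "party"]
counts = [
    [3, 2, 4, 0, 0, 0],
    [2, 3, 3, 0, 0, 0],
    [0, 0, 0, 4, 3, 2],
    [0, 0, 1, 3, 4, 3],
]
weights, features = factorize(counts, n_features=2)

# Describe each discovered feature by its three strongest words.
for row in features:
    top = sorted(zip(row, words), reverse=True)[:3]
    print([w for _, w in top])
```

Because every update only multiplies by non-negative ratios, the two factors stay non-negative throughout, which is what lets each row of `features` be read as a theme and each row of `weights` as a mix of themes.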

It’s very cool to see how some clever math and optimization can actually allow the algorithm to come up with its own categories/classes of articles (or anything else you’re studying), without having any training data or input from the user about what is expected.

I have one more chapter left in the book, on Genetic Programming, which I’m excited to get through tomorrow. It’s all about writing programs that can actually modify and evolve the code itself. Super cool!

I’m also looking forward to learning about Neural Networks more in-depth. I’d really like to understand on a deep conceptual level what neural nets are really doing, and this online book looks like a great way to get started: http://neuralnetworksanddeeplearning.com

I ended the day by giving a short presentation about a beer clustering project I did a couple of months ago in Python. My beer recommendations iOS app generated over 200,000 beer ratings, which has been a pretty cool data set to play around with. I started by scattering beers randomly on a two-dimensional grid, then moved the points so that the distance between each pair of beers approximated their Pearson distance, which I calculated by comparing user ratings across all of the beers. Beers that were rated most similarly should end up closer together on the beer map, and beers that were rated most differently should end up far apart. I figured out some tricks to break out of the local minima I kept running into, and ended up with a pretty cool map of many different beers and how they relate to each other by user preference. (The green color represents IBU – International Bittering Units, a measure of bitterness/hoppiness in beer, and the blue color represents ABV, or alcohol content, of each beer.)
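The basic approach can be sketched in a few lines of Python. This is a simplified reconstruction, not my actual project code: the beer names and ratings are made up, every beer is assumed to be rated by the same five users, the learning rate and iteration count are arbitrary, and none of the tricks for escaping local minima are included.

```python
import math
import random


def pearson_distance(a, b):
    """1 - Pearson correlation of two equal-length rating lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 1.0  # correlation undefined; treat as uncorrelated
    return 1.0 - cov / math.sqrt(var_a * var_b)


def scale_down(target, rate=0.05, iterations=500, seed=0):
    """Place items in 2D so pairwise distances approximate target distances."""
    rng = random.Random(seed)
    n = len(target)
    points = [[rng.random(), rng.random()] for _ in range(n)]
    for _ in range(iterations):
        grads = [[0.0, 0.0] for _ in range(n)]
        for i in range(n):
            for j in range(n):
                if i == j:
                    continue
                dx = points[i][0] - points[j][0]
                dy = points[i][1] - points[j][1]
                d = math.hypot(dx, dy) or 1e-9
                err = d - target[i][j]  # positive means the pair is too far apart
                # Gradient of the squared error with respect to point i
                # (the constant factor is folded into the learning rate).
                grads[i][0] += err * dx / d
                grads[i][1] += err * dy / d
        for p, g in zip(points, grads):
            p[0] -= rate * g[0]
            p[1] -= rate * g[1]
    return points


# Hypothetical ratings: one list per beer, one entry per user.
ratings = {
    "ipa_a":   [5, 4, 1, 2, 5],
    "ipa_b":   [4, 5, 2, 1, 4],
    "stout_a": [1, 2, 5, 4, 1],
    "stout_b": [2, 1, 4, 5, 2],
}
names = list(ratings)
target = [[pearson_distance(ratings[a], ratings[b]) for b in names] for a in names]
points = scale_down(target)
for name, (x, y) in zip(names, points):
    print(f"{name}: ({x:.2f}, {y:.2f})")
```

Plain gradient descent like this only finds a nearby minimum of the layout error, which is why a larger data set needs extra tricks to shake points out of bad arrangements.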

It was my first time ever presenting a coding project I’d done in front of a group of people, and it was a cool experience to share something I’d been working on with a group that could follow what I’d done and understand what was neat about it. It was great practice for me in talking about my coding work to a larger group, which I have done much more with my design work than with my programming projects.